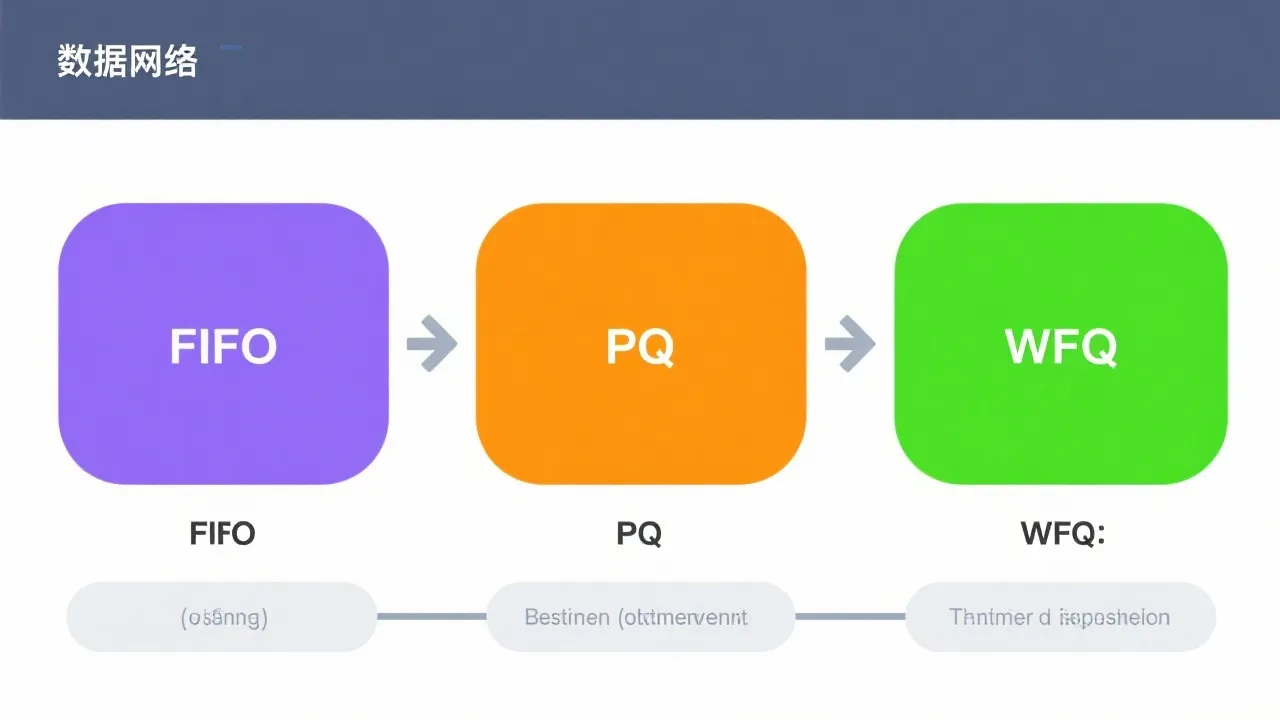

This article explores three fundamental scheduling algorithms in networking: FIFO, PQ, and WFQ. These algorithms govern the order in which queued data packets are forwarded, which directly affects network performance and efficiency. Understanding their differences and applications helps professionals choose the right strategy for a given set of network requirements.

In the realm of computer networking, data packet management is a critical aspect that influences the performance and efficiency of data flow. Among the various scheduling algorithms employed, First-In-First-Out (FIFO), Priority Queuing (PQ), and Weighted Fair Queuing (WFQ) are prominent. These algorithms determine the order in which packets are processed, impacting the overall quality of service (QoS) within networks. This article delves into the intricacies of these scheduling algorithms, providing insights into their mechanisms, advantages, and applications in networking environments.

Networking efficiency is vital for the smooth operation of modern communication systems. With the rise of applications requiring rapid and reliable data transmission—such as cloud computing, online gaming, and real-time video streaming—the role of scheduling algorithms has become increasingly significant. Effective data packet management ensures that resources are utilized optimally while minimizing delays and maximizing throughput. In this context, the choice of scheduling algorithm can lead to dramatic differences in performance across varying network conditions.

Quality of Service (QoS) refers to a network's ability to deliver predictable performance for data transmission, usually measured in terms of latency, jitter (variation in delay), packet loss, and throughput. As networks evolve, maintaining QoS becomes paramount, especially for applications with strict performance requirements. By strategically implementing scheduling algorithms like FIFO, PQ, and WFQ, network managers can influence these metrics and balance the competing demands of different users and applications. This balancing act is particularly challenging in heterogeneous networks where diverse traffic types must be accommodated.

First-In-First-Out (FIFO) is the simplest scheduling algorithm used in networking. It transmits packets in exactly the order they arrive, like a checkout line at a retail store. FIFO does not distinguish between packet types or sources, so packet handling is straightforward and predictable. This simplicity is an advantage wherever low processing overhead matters more than differentiating between kinds of traffic.
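A FIFO scheduler needs nothing more than a single queue. The Python sketch below is illustrative only; the `Packet` and `FifoScheduler` names are hypothetical and not taken from any real networking stack.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Packet:
    flow: str  # illustrative flow label, e.g. "web" or "voip"
    size: int  # packet length in bytes


class FifoScheduler:
    """Hypothetical single-queue scheduler: packets leave in arrival order."""

    def __init__(self) -> None:
        self.queue: deque[Packet] = deque()

    def enqueue(self, pkt: Packet) -> None:
        # No classification step: every packet joins the tail of one queue.
        self.queue.append(pkt)

    def dequeue(self) -> Packet | None:
        # Always transmit the head of the queue, whatever its flow or size.
        return self.queue.popleft() if self.queue else None


sched = FifoScheduler()
for p in [Packet("web", 1500), Packet("voip", 200), Packet("web", 1500)]:
    sched.enqueue(p)

order = []
while (p := sched.dequeue()) is not None:
    order.append(p.flow)
print(order)  # ['web', 'voip', 'web']
```

The small VoIP packet gets no special treatment: it leaves second simply because it arrived second.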

For instance, in simple data exchanges where all packets have similar requirements, such as basic web browsing, FIFO performs adequately and is efficient because there is only a single queue to manage and no classification step. In high-traffic environments, however, FIFO offers no means of managing congestion: when its single buffer fills, arriving packets are typically dropped regardless of which flow they belong to, so one aggressive flow can crowd out the others. In scenarios with mixed traffic types, the lack of prioritization can increase latency for critical packets, degrading overall QoS.
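To make the congestion problem concrete, here is a minimal sketch of a FIFO buffer with tail drop. Tail drop is an assumption here (the paragraph does not name a drop policy), though it is the usual default for a plain FIFO queue; the buffer size is also arbitrary.

```python
from collections import deque

BUFFER_LIMIT = 10  # assumed buffer capacity, in packets

queue = deque()  # the single shared FIFO buffer
dropped = []     # packets discarded on arrival


def enqueue_tail_drop(pkt: str) -> None:
    # Tail drop: when the shared buffer is full, the arriving packet is
    # discarded, regardless of which flow it belongs to.
    if len(queue) >= BUFFER_LIMIT:
        dropped.append(pkt)
    else:
        queue.append(pkt)


# A bulk transfer bursts 12 packets before the link drains any of them...
for i in range(12):
    enqueue_tail_drop(f"bulk-{i}")
# ...so a voice packet arriving next finds the buffer full.
enqueue_tail_drop("voip-0")

print(len(queue), dropped)  # 10 ['bulk-10', 'bulk-11', 'voip-0']
```

Because there is only one buffer, the bulk flow's burst decides whether the voice packet survives.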

Furthermore, FIFO suffers from what is often called “head-of-line blocking”: a large packet, or a burst of packets, at the front of the queue delays the smaller, latency-sensitive packets queued behind it. This limitation underscores the need for a more sophisticated approach to traffic management wherever traffic types are diverse.
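The size of this delay is simple arithmetic: each queued packet occupies the link for its serialization time, size in bits divided by link rate. The sketch below assumes a 1 Mbit/s link and typical packet sizes purely for illustration, and ignores propagation delay and any packet already partway through transmission.

```python
def serialization_ms(size_bytes: int, link_bps: float) -> float:
    """Time needed to clock one packet onto the link, in milliseconds."""
    return size_bytes * 8 / link_bps * 1000


LINK_BPS = 1_000_000        # assumed 1 Mbit/s link, for illustration only
ahead = [1500, 1500, 1500]  # three full-size bulk packets already queued
voice = 200                 # small voice packet arriving behind them

# In a FIFO queue the voice packet must wait for everything ahead of it.
wait = sum(serialization_ms(s, LINK_BPS) for s in ahead)
total = wait + serialization_ms(voice, LINK_BPS)
print(f"wait {wait:.1f} ms, total {total:.1f} ms")  # wait 36.0 ms, total 37.6 ms
```

The voice packet needs only 1.6 ms of link time of its own, yet spends 36 ms waiting; on faster links the absolute numbers shrink, but the ratio stays the same.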

Priority Queuing (PQ) adds a layer of sophistication that FIFO lacks. Packets are classified into separate queues by priority level, and the scheduler always serves the highest-priority non-empty queue first. PQ is particularly beneficial in networks where certain data packets, such as voice or video, require prioritized handling to maintain quality and integrity. The primary advantage of PQ lies in its ability to reduce latency for high-priority traffic, giving these packets preferential treatment when the network is busy.
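The strict-priority rule can be sketched with one queue per level. This is a minimal illustration, assuming level 0 is the highest priority and that packets within a level are served FIFO; the class name is hypothetical.

```python
from __future__ import annotations

from collections import deque


class StrictPriorityScheduler:
    """Hypothetical strict PQ: level 0 is the highest priority."""

    def __init__(self, levels: int) -> None:
        self.queues = [deque() for _ in range(levels)]

    def enqueue(self, pkt: str, priority: int) -> None:
        # Classification has already happened; just append to that level.
        self.queues[priority].append(pkt)

    def dequeue(self) -> str | None:
        # Scan from highest to lowest priority; serve the first non-empty queue.
        for q in self.queues:
            if q:
                return q.popleft()
        return None


pq = StrictPriorityScheduler(levels=3)
pq.enqueue("web-1", 2)
pq.enqueue("video-1", 1)
pq.enqueue("voip-1", 0)
pq.enqueue("web-2", 2)
served = [pq.dequeue() for _ in range(4)]
print(served)  # ['voip-1', 'video-1', 'web-1', 'web-2']
```

The VoIP packet arrived third but leaves first; the two web packets keep their relative order within their own level.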

In real-world applications, PQ is commonly deployed in Voice over IP (VoIP) systems and video conferencing tools, where timely delivery of data packets is essential for call clarity and video quality. By marking packets according to their importance (for example, with a DSCP value in the IP header), network devices can steer time-sensitive data into the high-priority queue so that it is transmitted without unnecessary delay, even under congestion.
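One widely used marking is the 6-bit DSCP field, carried in the upper bits of the IPv4 ToS (or IPv6 Traffic Class) byte. The sketch below maps two standard code points, EF (46) and AF41 (34), to levels of a three-level priority queue. The mapping table itself is a hypothetical policy chosen for illustration; real devices use whatever mapping the administrator configures.

```python
# Standard DSCP code points: EF (RFC 3246) and AF41 (RFC 2597).
DSCP_EF = 46    # Expedited Forwarding, commonly used for voice
DSCP_AF41 = 34  # Assured Forwarding class 4, low drop precedence

# Hypothetical policy: map DSCP values to levels of a 3-level PQ.
DSCP_TO_PRIORITY = {DSCP_EF: 0, DSCP_AF41: 1}
DEFAULT_PRIORITY = 2  # all other traffic is treated as best effort


def dscp_from_tos(tos_byte: int) -> int:
    """DSCP is the upper six bits of the ToS / Traffic Class byte."""
    return (tos_byte >> 2) & 0x3F


def classify(tos_byte: int) -> int:
    return DSCP_TO_PRIORITY.get(dscp_from_tos(tos_byte), DEFAULT_PRIORITY)


print(classify(0xB8), classify(0x88), classify(0x00))  # 0 1 2
```

A ToS byte of 0xB8 carries DSCP 46 (EF), so the packet lands in the top queue; unmarked traffic falls through to best effort.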

However, a significant downside is the potential for lower-priority traffic to experience indefinite delays, especially during network congestion. In cases where the network is consistently saturated, lower-priority packets can be starved of bandwidth, leading to degradation in service for non-critical applications. This trade-off may not be suitable for all environments and requires careful consideration by network administrators when designing their infrastructure.
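Starvation follows directly from the strict-priority rule. This toy simulation assumes a saturated link that can send one packet per tick while one high-priority and one low-priority packet arrive every tick; the numbers are illustrative, not measurements.

```python
from collections import deque

high, low = deque(), deque()
sent = {"high": 0, "low": 0}

# Assumed load: every tick one high- and one low-priority packet arrive,
# but the link can transmit only one packet per tick (saturated link).
for tick in range(1000):
    high.append(f"h{tick}")
    low.append(f"l{tick}")
    # Strict priority: the low queue is served only when the high queue is empty.
    if high:
        high.popleft()
        sent["high"] += 1
    elif low:
        low.popleft()
        sent["low"] += 1

print(sent, "low backlog:", len(low))
# {'high': 1000, 'low': 0} low backlog: 1000
```

As long as high-priority traffic alone fills the link, the low-priority queue is never served and its backlog only grows.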

Moreover, while implementing PQ, administrators must also consider the complexities involved in managing multiple queues across devices. This can introduce additional overhead in terms of configuration and maintenance. As a result, organizations often weigh the benefits of enhanced QoS against the operational costs associated with setting up and managing multiple queuing mechanisms.

Weighted Fair Queuing (WFQ) presents an advanced approach that seeks to balance fair access to network resources with efficiency. Unlike PQ, which strictly prioritizes traffic, WFQ assigns weights to different data flows, allowing for a proportional distribution of bandwidth. This ensures that each flow receives its fair share of resources while still accommodating priority levels based on the defined weights.
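WFQ is usually implemented by stamping each packet with a virtual finish time (roughly, its size divided by its flow's weight, added to the flow's previous finish time) and always sending the packet with the smallest stamp. Exact WFQ tracks virtual time by emulating an idealized fluid scheduler (Generalized Processor Sharing), which is costly. The sketch below instead uses the simpler self-clocked approximation (SCFQ), where virtual time is just the stamp of the last packet sent. It is a named substitute, not exact WFQ, and the class name and weights are hypothetical.

```python
from __future__ import annotations

import heapq
import itertools
from collections import Counter


class WeightedFairQueue:
    """Hypothetical WFQ-style scheduler using self-clocked virtual time (SCFQ)."""

    def __init__(self, weights: dict[str, float]) -> None:
        self.weights = weights
        self.last_finish = {flow: 0.0 for flow in weights}  # per-flow finish tag
        self.virtual_time = 0.0  # finish tag of the packet most recently sent
        self.heap: list[tuple[float, int, str, int]] = []
        self.seq = itertools.count()  # tie-breaker for equal finish tags

    def enqueue(self, flow: str, size: int) -> None:
        # Finish tag = start tag + size / weight, so a higher weight makes
        # the flow's packets "finish" sooner in virtual time.
        start = max(self.last_finish[flow], self.virtual_time)
        finish = start + size / self.weights[flow]
        self.last_finish[flow] = finish
        heapq.heappush(self.heap, (finish, next(self.seq), flow, size))

    def dequeue(self) -> tuple[str, int] | None:
        # Transmit the packet with the smallest finish tag.
        if not self.heap:
            return None
        finish, _, flow, size = heapq.heappop(self.heap)
        self.virtual_time = finish  # self-clocking: virtual time follows service
        return flow, size


wfq = WeightedFairQueue({"video": 3, "web": 1})  # assumed 3:1 weights
for _ in range(12):
    wfq.enqueue("video", 1500)
for _ in range(4):
    wfq.enqueue("web", 1500)

first_eight = [wfq.dequeue()[0] for _ in range(8)]
print(Counter(first_eight))  # Counter({'video': 6, 'web': 2})
```

With both flows backlogged and equal packet sizes, the video flow receives three packets for every one the web flow sends, matching the 3:1 weights, yet the web flow is never shut out.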

WFQ is particularly adept at managing diverse traffic types, making it a popular choice for modern networks supporting multimedia applications and mixed data traffic. For instance, in a network where video streaming, VoIP, and general web traffic coexist, WFQ can dynamically allocate bandwidth to ensure that all types of traffic receive adequate service without allowing lower-priority applications to starve.

The strength of WFQ lies in how it adapts bandwidth allocation: because it is work-conserving, bandwidth left unused by idle flows is automatically redistributed among the active flows in proportion to their weights, without the weights themselves having to change. This adaptability is crucial as user behavior changes and network conditions fluctuate over time, whether due to spikes in user activity or the introduction of new applications requiring real-time data transport.
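The redistribution can be worked out directly: each active flow's share is its weight divided by the sum of the active flows' weights, times the link capacity. This sketch assumes every active flow is backlogged; a flow that wants less than its share would in practice release the remainder to the others (max-min fairness), which is not modeled here. The weights are hypothetical.

```python
from __future__ import annotations


def fair_shares(capacity_mbps: float, weights: dict[str, float],
                active: set[str]) -> dict[str, float]:
    """Split link capacity among active flows in proportion to their weights.

    Assumes every active flow is backlogged (always has data to send).
    """
    total = sum(weights[f] for f in active)
    return {f: capacity_mbps * weights[f] / total for f in sorted(active)}


WEIGHTS = {"video": 5, "voip": 2, "web": 3}  # hypothetical weights

print(fair_shares(10, WEIGHTS, {"video", "voip", "web"}))
# {'video': 5.0, 'voip': 2.0, 'web': 3.0}
print(fair_shares(10, WEIGHTS, {"video", "web"}))  # VoIP flow is idle
# {'video': 6.25, 'web': 3.75}
```

When the VoIP flow goes quiet, its 2 Mbit/s is split 5:3 between video and web, and the weights never change.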

However, WFQ is more complex to implement than FIFO or PQ. The scheduler must keep per-flow state and compute a virtual finish time for every packet, and network operators must assign weights that reflect actual traffic characteristics, then monitor and adjust those weights as network conditions evolve. As such, while WFQ offers a sophisticated mechanism for traffic management, it also demands a higher level of operational sophistication and resource investment from network administrators.

| Algorithm | Mechanism | Advantages | Challenges |
|---|---|---|---|
| FIFO | Processes packets as they arrive | Simple and predictable; low overhead | Not ideal for high traffic; lacks prioritization; head-of-line blocking possible |
| PQ | Assigns priority levels to packets | Ensures quick processing of critical packets; reduces latency for high-priority traffic | Lower-priority traffic may face indefinite delays; introduces complexity to queue management |
| WFQ | Distributes bandwidth proportionally among flows | Balances efficiency and fairness; adaptable and dynamic bandwidth allocation | Complex implementation; requires careful weight assignment and monitoring |

Determining which scheduling algorithm to implement requires careful consideration of various factors such as network architecture, user demands, and traffic characteristics. In environments with relatively uniform traffic patterns and lower bandwidth demands, FIFO may be perfectly adequate. Its lightweight nature means that it can handle workloads efficiently without added complexity.

On the other hand, environments that carry mixed or critical traffic should consider Priority Queuing. PQ prioritizes essential services such as real-time communications, improving the end-user experience for applications where performance is critical. However, organizations should also guard against its pitfalls, such as starvation of lower-priority packets, for example by capping the bandwidth the high-priority queue may consume.
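One way to limit starvation is to cap the priority queue with a token bucket: high-priority packets may go first only while tokens remain, and lower queues get whatever is left. The sketch below uses a discrete-tick model with an assumed cap of half the link; excess priority packets simply wait here, whereas a real device might drop them instead. The function and parameters are hypothetical.

```python
from collections import deque


def simulate(ticks: int, high_rate: float, burst: float) -> dict[str, int]:
    """Saturated link sending one packet per tick; high queue is token-limited.

    high_rate: tokens (packets) added to the bucket per tick (assumed cap).
    burst: maximum number of tokens the bucket can hold.
    """
    high, low = deque(), deque()
    tokens = burst
    sent = {"high": 0, "low": 0}
    for t in range(ticks):
        high.append(t)  # both classes always have traffic waiting
        low.append(t)
        tokens = min(burst, tokens + high_rate)
        # Priority is honoured only while the bucket holds a full token.
        if high and tokens >= 1:
            high.popleft()
            tokens -= 1
            sent["high"] += 1
        elif low:
            low.popleft()
            sent["low"] += 1
    return sent


print(simulate(1000, high_rate=0.5, burst=1.0))  # {'high': 500, 'low': 500}
print(simulate(1000, high_rate=1.0, burst=1.0))  # {'high': 1000, 'low': 0}
```

With the cap at half the link rate, low-priority traffic keeps the other half; with the cap at full link rate, the behavior reverts to plain strict priority and the low queue starves.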

For organizations that must support a diverse array of services, Weighted Fair Queuing is often the preferred choice. While requiring a more sophisticated setup, WFQ can provide the necessary balance between performance and fairness, ensuring that all applications have equitable access to resources. This is particularly important in modern networks where IoT devices, multimedia, and other demanding services co-exist, creating a need for intelligent traffic management.

Each scheduling algorithm (FIFO, PQ, and WFQ) suits different network demands. FIFO is effective where processing simplicity and speed are paramount, such as straightforward data handling tasks like email or basic file transfers. When the cost of latency is low, FIFO often suffices.

PQ proves invaluable in environments that require consistent quality for critical applications, which makes it popular in Voice over IP (VoIP) and video conferencing. In these contexts, degraded service quality quickly leads to unacceptable user experiences. With PQ, network managers can ensure that voice packets or video streams are consistently served first, keeping communication smooth even when the link is busy, provided the prioritized traffic itself stays within the link's capacity.

Meanwhile, WFQ finds its niche in complex, heterogeneous networks with varying requirements, such as those supporting cloud services, multimedia streaming, and real-time applications. Here, the ability to manage different traffic types ensures that critical services receive adequate resources while less urgent applications still function efficiently. Enterprises can use WFQ within their internal networks to protect the performance of applications that depend on real-time data streams or periodic updates. WFQ can also help organizations meet the performance metrics promised in the service-level agreements (SLAs) they maintain with their customers.

Furthermore, networks that support applications like online gaming face their own challenges. Fast packet processing and minimal latency are crucial in these environments, and an adaptive method like WFQ can be a sensible choice: by giving gaming flows an appropriate weight, network operators can cater to the dynamic nature of gaming traffic and provide a more responsive and engaging user experience.

The evolution of internet technologies continuously transforms the landscape of data network management. Emerging trends, such as the increase in Internet of Things (IoT) devices, expand the demands on scheduling algorithms. As more devices become interconnected, networks will face increased traffic loads featuring various types of data exchange—from simple sensor updates to extensive video data streams.

Consequently, professionals must regularly reevaluate their network strategies and consider advanced algorithms like WFQ, or hybrid approaches tailored to specific network needs. This evolution can also involve machine learning and artificial intelligence techniques that predict traffic patterns more accurately and manage resources adaptively. As network complexity grows, the need for sophisticated, adaptive scheduling methods becomes increasingly evident.

Moreover, as cloud computing continues to expand, service providers are rethinking their approaches to network management. The shift from traditional data centers to cloud infrastructure requires algorithms that can efficiently handle the dynamic nature of cloud workloads. This demands a combination of virtualized environments and intelligent scheduling algorithms, optimizing resource utilization while maintaining sufficient QoS for all applications hosted in these environments.

In addition, the rise of mobile computing and edge networking creates new challenges and opportunities for scheduling algorithms. The exponential growth of mobile devices and applications necessitates novel solutions to prioritize traffic efficiently while considering physical proximity to resources. Edge computing promises reduced latency and higher responsiveness by processing data closer to where it is generated; however, this shift also complicates the traffic landscape. The algorithms employed must accommodate varying bandwidth requirements and dynamic user behavior, which puts additional emphasis on choosing the right scheduling techniques.

Understanding and selecting the appropriate scheduling algorithm is crucial for optimal network performance. While FIFO, PQ, and WFQ each offer unique advantages, their applicability varies with network traffic patterns, infrastructure, and performance objectives. Selecting the most appropriate algorithm is about more than knowing how each one works; it also requires understanding an organization's specific requirements and anticipated network conditions.

Lastly, as technology continues to advance, the need for adaptive and intelligent scheduling algorithms will only grow. Organizations must stay informed about industry trends and innovations to ensure their networks remain efficient, responsive, and capable of meeting users' ever-changing demands. By implementing the right scheduling strategies in tandem with maintaining awareness of technological advancements, networking professionals can position their organizations for success in the future.